Artistic style transfer stands at the intersection of technology and creativity, offering a captivating glimpse into the potential of deep learning algorithms to transform digital art. With its ability to seamlessly blend the style of one image with the content of another, this technique has captured the imagination of artists, designers, and technologists alike.

In this tutorial, we embark on a journey to master the art of style transfer using the power of deep learning. By leveraging neural networks and sophisticated algorithms, we’ll unveil the secrets behind creating captivating artworks that seamlessly merge the essence of different visual styles.

At the core of artistic style transfer lies the fusion of two key elements: content and style. Through intricate computations and iterative optimization, a neural network’s internal representations are used to separate the content of one image from the stylistic nuances of another, and a new image is gradually shaped to match both. The result? A harmonious synthesis of artistic expression, where the essence of one artwork gracefully permeates the form of another.

Throughout this tutorial, we’ll demystify the underlying principles of style transfer, empowering you to wield this transformative tool with confidence and finesse. From setting up your deep learning environment to fine-tuning the parameters for optimal results, each step is meticulously crafted to provide a comprehensive understanding of the process.

But beyond the technical intricacies lies a realm of boundless creativity and expression. As you embark on your journey into the world of style transfer, remember that the true magic lies not in the algorithms themselves, but in the infinite possibilities they unlock. Whether you’re an aspiring artist seeking new avenues for expression or a seasoned technologist pushing the boundaries of innovation, this tutorial invites you to explore the convergence of art and technology in a manner that transcends conventional boundaries.

So, join us as we delve into the realm of deep learning for artistic style transfer—a realm where pixels become brushstrokes, algorithms become artisans, and imagination knows no limits. Through knowledge, experimentation, and a dash of inspiration, let us embark on a quest to unlock the full potential of this captivating technique and unleash our creativity upon the digital canvas.

Understanding Artistic Style Transfer

Artistic style transfer is a mesmerizing technique that marries the content of one image with the style of another, resulting in visually striking compositions that seamlessly blend the characteristics of both. At its core, this process involves the intricate interplay of content and style, orchestrated through the sophisticated computations of neural networks.

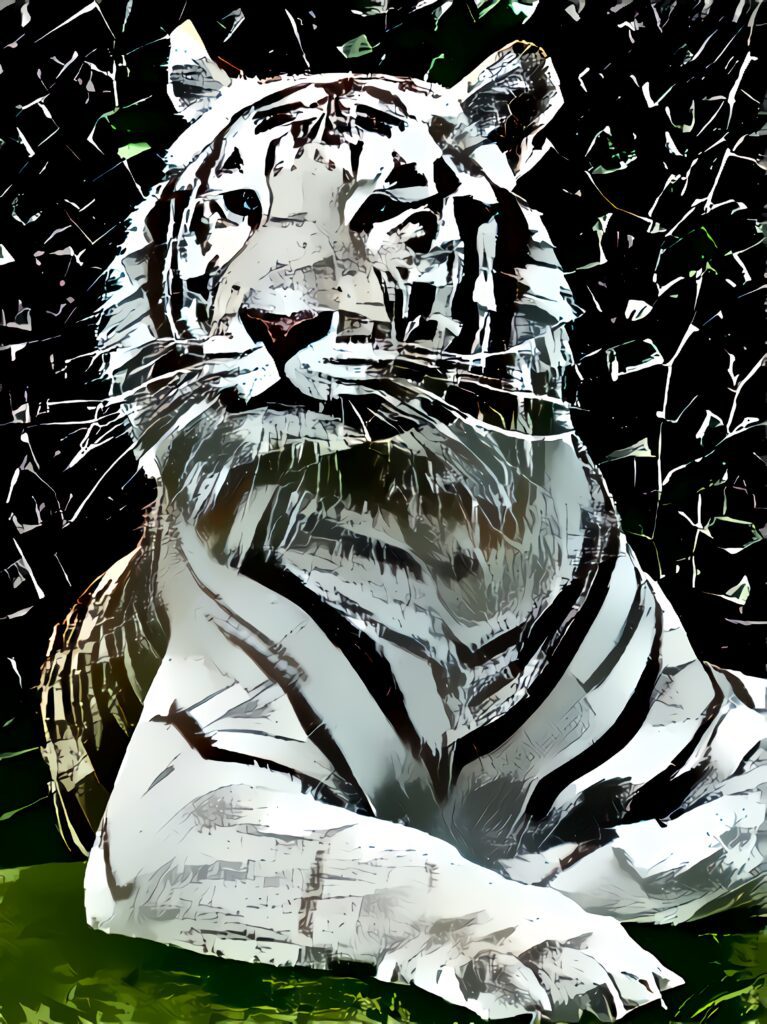

In essence, the concept revolves around the idea of reimagining an image by imbuing it with the artistic essence of another. Picture a serene landscape transformed into a masterpiece reminiscent of Van Gogh’s Starry Night, or a modern cityscape infused with the fractured geometry of Picasso’s cubist paintings. This transformative process transcends mere image manipulation, offering a gateway to a realm where artistry meets technology in a harmonious dance of creativity.

Central to the implementation of artistic style transfer are neural networks, the backbone of modern machine learning. The networks typically used are convolutional networks pretrained to recognize objects in photographs (for example, on the ImageNet dataset). In learning that task they come to detect intricate patterns and features within images, and those internal features turn out to be useful for separating the content of an image from its style. Through successive layers of convolutions, these networks extract hierarchical representations of visual features, capturing the essence of both content and style in a series of interconnected layers.

The magic unfolds as these extracted features are used to guide the reconstruction of a target image: the image is adjusted until its features match the content features of one picture and the style statistics of another, so that it preserves its content while integrating the stylistic characteristics of the reference artwork. This delicate balancing act is made possible by the rich, layered representations that deep neural networks learn.

As we delve deeper into the realm of style transfer, we encounter a rich tapestry of techniques and methodologies, each offering its own unique approach to the creative process. From the pioneering work of Gatys et al. with the seminal Neural Style Transfer algorithm to the refinement of techniques such as Fast Neural Style Transfer and Arbitrary Style Transfer, the landscape of style transfer continues to evolve with each passing day.

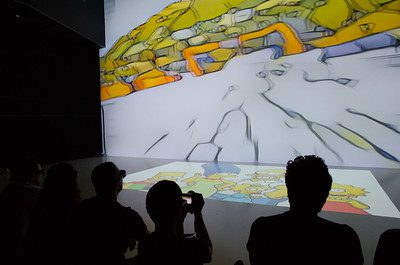

These techniques find applications across a diverse array of domains, ranging from digital art and design to photography, filmmaking, and beyond. Whether it’s transforming mundane snapshots into surrealistic masterpieces or infusing cinematic sequences with the aesthetic flair of iconic artworks, the possibilities are as limitless as the imagination itself.

In the realm of digital art, style transfer serves as a powerful tool for artists and designers seeking new avenues for expression and experimentation. It offers a means of transcending traditional boundaries, enabling creators to explore the intersection of different artistic styles and genres in a manner that redefines the very notion of visual storytelling.

As we embark on our journey to master the art of style transfer, let us embrace the transformative potential of this captivating technique. Through knowledge, experimentation, and a dash of inspiration, let us unlock the secrets of artistic expression that lie dormant within the pixels of our digital canvases.

Setting Up Your Environment

Before diving into the exciting world of artistic style transfer, it’s essential to ensure that your environment is properly configured to handle the computational demands of deep learning. This entails installing the necessary libraries and frameworks, as well as considering hardware requirements for optimal performance.

- Installing Necessary Libraries:

To begin, you’ll need to install the requisite deep learning libraries, such as TensorFlow, PyTorch, or similar frameworks. These libraries provide the foundational tools and functionalities needed to implement style transfer algorithms and train neural networks.

For TensorFlow users, the installation process typically involves using pip, Python’s package manager, to install the TensorFlow package. Depending on your system configuration and requirements, you may choose to install the CPU-only version or the GPU-enabled version for accelerated processing.

Similarly, PyTorch users can leverage pip to install the PyTorch library, which offers a rich set of features for deep learning tasks, including style transfer. Like TensorFlow, PyTorch supports both CPU and GPU computation, allowing users to harness the power of parallel processing for faster training and inference.
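As a quick sanity check after installing (for example with `pip install torch torchvision` or `pip install tensorflow`; GPU builds may need a platform-specific command from each framework’s official install page), the short Python sketch below reports which frameworks are importable and whether they can see a GPU. The helper name `check_frameworks` is purely illustrative; the underlying calls (`importlib.util.find_spec`, `torch.cuda.is_available`, `tf.config.list_physical_devices`) are standard.

```python
import importlib.util


def check_frameworks(names=("torch", "tensorflow")):
    """Report which frameworks are importable and whether each one sees a GPU."""
    # find_spec returns None when a package is not installed, without importing it
    report = {name: importlib.util.find_spec(name) is not None for name in names}
    if report.get("torch"):
        import torch
        report["torch_cuda_available"] = torch.cuda.is_available()
    if report.get("tensorflow"):
        import tensorflow as tf
        report["tensorflow_gpu_count"] = len(tf.config.list_physical_devices("GPU"))
    return report


if __name__ == "__main__":
    print(check_frameworks())
```

If a framework shows up as installed but reports no GPU, the usual suspects are a missing or outdated NVIDIA driver or a CPU-only build of the package.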

- Overview of Hardware Requirements:

While style transfer algorithms can be implemented and run on standard CPU-based systems, leveraging a GPU (Graphics Processing Unit) can significantly accelerate processing, especially during the iterative optimization of the generated image (or, for feed-forward methods, the training phase). GPUs excel at performing parallel computations, making them well-suited for the matrix operations and convolutional layers inherent in deep learning models.

For users considering GPU acceleration, it’s important to ensure compatibility with the deep learning framework of choice. Both TensorFlow and PyTorch support GPU computation, with NVIDIA GPUs being the most widely supported. Both frameworks rely on CUDA (Compute Unified Device Architecture) and cuDNN (CUDA Deep Neural Network library) for NVIDIA GPU acceleration; depending on the framework version and platform, these libraries may be bundled with the pip packages or may need to be installed separately, so check each framework’s installation guide for the versions it expects.

When selecting a GPU, factors such as memory capacity, compute capability, and price-performance ratio should be taken into consideration. High-end NVIDIA GPUs, such as those from the GeForce RTX or Tesla series, offer substantial computational power and memory bandwidth, making them ideal for deep-learning workloads. However, budget-conscious users may opt for more affordable options, such as NVIDIA’s GTX series, which still provides respectable performance for style transfer tasks.

In summary, configuring your environment for style transfer involves installing the necessary deep-learning libraries and frameworks, as well as considering hardware requirements for optimal performance. By leveraging powerful GPU acceleration and state-of-the-art deep learning frameworks, you’ll be well-equipped to embark on your journey into the realm of artistic expression and creativity.


Preparing Your Data

Before delving into the intricacies of style transfer, it’s essential to meticulously prepare your data to ensure optimal results. This involves two key steps: gathering the content and style images and preprocessing them to ensure compatibility with the neural network architecture.

- Gathering Content and Style Images:

The first step in data preparation is to select suitable content and style images that will serve as the basis for the style transfer process. Content images represent the subject matter or scene that you wish to transform, while style images embody the artistic characteristics or visual style that you aim to apply.

When choosing content images, consider selecting high-quality photographs or digital artworks that showcase the desired subject matter. These images should possess clear and recognizable features, as they will serve as the foundation upon which the stylistic elements will be overlaid.

Similarly, when selecting style images, look for artwork, paintings, or photographs that exemplify the desired artistic style or aesthetic. Whether it’s the impressionistic brushstrokes of Monet, the geometric patterns of Mondrian, or the vibrant colors of Kandinsky, choose style images that encapsulate the essence of the desired artistic expression.

It’s worth experimenting with different combinations of content and style images to explore the myriad possibilities of style transfer. By selecting diverse and visually compelling images, you’ll be able to unleash your creativity and produce captivating results.

- Preprocessing Images:

Once you’ve gathered your content and style images, it’s time to preprocess them to ensure compatibility with the neural network architecture used for style transfer. This typically involves two main steps: resizing and normalizing the images.

Resizing: The convolutional layers of networks like VGG-19 accept inputs of almost any size, but resizing still matters. Memory use and run time grow with the number of pixels, and bringing the content and style images to comparable scales keeps the style’s brushstrokes at a sensible size relative to the content. Common image sizes for style transfer tasks include 256×256 or 512×512 pixels, although the exact dimensions depend on the implementation and on your GPU memory.

Normalization: Because a pretrained network’s weights were learned on images preprocessed in a particular way, your inputs should be normalized the same way. This typically involves scaling the pixel values to a standard range, such as [0, 1] or [-1, 1], and subtracting a per-channel mean (and, for torchvision’s pretrained models, dividing by a per-channel standard deviation). Inputs that match the distribution the network was trained on produce meaningful feature activations, which makes the optimization better behaved and the results more visually coherent.
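The two preprocessing steps above can be sketched in a few lines of NumPy. This assumes an RGB image already loaded as an `H×W×3` `uint8` array and a network trained with torchvision’s ImageNet statistics (mean `[0.485, 0.456, 0.406]`, standard deviation `[0.229, 0.224, 0.225]`); other pretrained weights may expect different preprocessing. The nearest-neighbour resize is a deliberate simplification to keep the example dependency-free (a real pipeline would use a library resize with bilinear or bicubic filtering), and the helper names are hypothetical.

```python
import numpy as np

# Per-channel ImageNet statistics used by torchvision's pretrained models.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(img, size):
    """Nearest-neighbour resize of an H x W x C array to size x size.
    Note: this ignores aspect ratio; crop first if that matters."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]


def preprocess(img_uint8, size=256):
    """uint8 H x W x 3 RGB -> normalized float32 array of shape 3 x size x size."""
    img = resize_nearest(img_uint8, size).astype(np.float32) / 255.0  # scale to [0, 1]
    img = (img - IMAGENET_MEAN) / IMAGENET_STD  # per-channel mean/std normalization
    return img.transpose(2, 0, 1)  # HWC -> CHW, the layout PyTorch expects


def deprocess(chw):
    """Invert preprocess (except the resize) to get a displayable uint8 image."""
    img = chw.transpose(1, 2, 0) * IMAGENET_STD + IMAGENET_MEAN
    return (np.clip(img, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

The `deprocess` step is what you would apply to the generated image at the end, since the optimized pixels live in the normalized space.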

Additionally, you may choose to perform other preprocessing steps, such as color adjustments to the inputs, or data augmentation if you are training a feed-forward style network on many images rather than optimizing a single output. However, it’s important to strike a balance between preprocessing complexity and computational overhead, as excessive preprocessing may lead to diminishing returns without significant improvements in output quality.

By carefully gathering and preprocessing your data, you lay the groundwork for successful style transfer experiments and empower yourself to explore the vast landscape of artistic expression with confidence and creativity. With your images prepared and your neural network primed for action, you’re ready to embark on the transformative journey of style transfer and unlock the full potential of deep learning in the realm of digital art.


Building the Neural Network Model

At the heart of artistic style transfer lies the intricate architecture of neural networks, which serve as the computational framework for translating artistic inspiration into digital reality. In this section, we’ll explore the key components of the neural network model used for style transfer and discuss the process of loading pre-trained model weights for feature extraction.

- Explanation of the Architecture:

One of the most commonly used architectures for style transfer is VGG-19, a deep convolutional neural network that has proven highly effective for image recognition tasks. VGG-19 is renowned for its simple, uniform design: it has 19 weight layers (16 convolutional layers and 3 fully connected layers), with max-pooling layers between its five convolutional blocks. For style transfer only the convolutional part is needed; the fully connected classifier layers are discarded.

The beauty of VGG-19 lies in its ability to capture hierarchical representations of visual features at different levels of abstraction. As information flows through the network, low-level features such as edges, colors, and simple textures are extracted in the early layers, while higher-level features such as object parts and shapes emerge in the deeper layers.

For style transfer, we leverage the feature maps generated by intermediate layers of the VGG-19 network to capture the content and style characteristics of the input images. By isolating and manipulating these feature maps, we can reconstruct the content of the content image while infusing it with the stylistic elements of the style image.
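To make the layer choice concrete, the plain-Python sketch below (no framework needed) enumerates VGG-19’s convolutional configuration and computes the position of each named layer in the order torchvision’s `vgg19().features` lays them out: each 3×3 convolution is followed by a ReLU, and each block ends in a max-pool. The content and style layers listed are the ones reported by Gatys et al.; other implementations choose differently.

```python
# VGG-19 convolutional configuration: numbers are output channels of
# 3x3 conv layers, "M" marks a 2x2 max-pool closing each block.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg19_layer_names(cfg=VGG19_CFG):
    """Names like 'conv1_1', 'relu1_1', 'pool1' in sequential module order."""
    names, block, conv_idx = [], 1, 1
    for item in cfg:
        if item == "M":
            names.append(f"pool{block}")
            block, conv_idx = block + 1, 1
        else:
            names.append(f"conv{block}_{conv_idx}")
            names.append(f"relu{block}_{conv_idx}")
            conv_idx += 1
    return names


NAMES = vgg19_layer_names()
INDEX = {name: i for i, name in enumerate(NAMES)}

CONTENT_LAYERS = ["conv4_2"]
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]

num_conv = sum(name.startswith("conv") for name in NAMES)
print(num_conv, "conv layers + 3 fully connected =", num_conv + 3, "weight layers")
# 16 conv layers + 3 fully connected = 19 weight layers
print({name: INDEX[name] for name in CONTENT_LAYERS + STYLE_LAYERS})
# {'conv4_2': 21, 'conv1_1': 0, 'conv2_1': 5, 'conv3_1': 10, 'conv4_1': 19, 'conv5_1': 28}
```

These indices are what you would use to pick out intermediate activations when iterating over the pretrained network’s layers.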

- Loading Pre-Trained Model Weights:

Training a deep neural network from scratch for style transfer tasks can be computationally intensive and time-consuming. Fortunately, we can leverage the power of transfer learning by using pre-trained models that have been trained on large-scale image datasets such as ImageNet.

To implement style transfer with VGG-19, we typically download pre-trained model weights that were learned for image classification on ImageNet. These weights encode the learned features of the network, capturing rich representations of visual patterns and textures that are essential for style transfer.

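Once the pre-trained model weights have been downloaded (libraries such as torchvision and Keras can fetch them automatically the first time they are requested), we load them into our neural network framework, whether it’s TensorFlow, PyTorch, or another deep learning library. This step initializes the network with the learned parameters, allowing us to extract feature maps from intermediate layers. The weights are then kept frozen: during style transfer the network itself is never updated, and only the generated image is optimized.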
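A minimal PyTorch sketch of this step, assuming torchvision 0.13 or later (the release that introduced the `weights=` argument and the `VGG19_Weights` enum). `load_vgg19_features` and `extract_features` are hypothetical helper names. The in-place ReLUs in torchvision’s VGG are swapped for non-in-place ones so that stored intermediate outputs are not overwritten, and all parameters are frozen because style transfer never updates the network.

```python
def load_vgg19_features():
    """Return torchvision's ImageNet-pretrained VGG-19 convolutional part
    (`.features`), frozen and with in-place ReLUs replaced."""
    # Imported lazily so extract_features below can be used without torch installed.
    import torch.nn as nn
    from torchvision.models import vgg19, VGG19_Weights

    features = vgg19(weights=VGG19_Weights.IMAGENET1K_V1).features.eval()
    for i in range(len(features)):
        if isinstance(features[i], nn.ReLU):
            # An in-place ReLU would overwrite the conv outputs we want to keep.
            features[i] = nn.ReLU(inplace=False)
    for param in features.parameters():
        param.requires_grad_(False)  # network stays fixed; only the image is optimized
    return features


def extract_features(layers, image, wanted):
    """Run `image` through `layers` in order and return {index: output}
    for every index in `wanted`, stopping after the last one needed."""
    outputs, x = {}, image
    last = max(wanted)
    for i, layer in enumerate(layers):
        x = layer(x)
        if i in wanted:
            outputs[i] = x
        if i == last:
            break
    return outputs
```

For example, `extract_features(load_vgg19_features(), x, {0, 5, 10, 19, 21, 28})`, where `x` is a normalized `1×3×H×W` float tensor, returns the activations at torchvision’s conv1_1, conv2_1, conv3_1, conv4_1, conv4_2, and conv5_1 positions. The first call downloads the weights.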

By harnessing the power of pre-trained models like VGG-19, we avoid the considerable cost of training a network ourselves; the remaining work is the optimization of the generated image, which still benefits greatly from a GPU. These pre-trained models serve as the cornerstone of our style transfer pipeline, providing the foundation upon which we can unleash our creativity and breathe life into our digital artworks.

In summary, the neural network model for style transfer, such as VGG-19, offers a powerful framework for capturing and manipulating visual features to achieve stunning artistic effects. By loading pre-trained model weights, we can leverage the knowledge encoded within the network to facilitate the style transfer process and unlock new realms of artistic expression and creativity.

Defining Loss Functions

In the realm of artistic style transfer, the essence of successful image transformation lies in the careful orchestration of loss functions—mathematical constructs that quantify the disparity between desired and generated image features. In this section, we delve into the dual facets of content loss and style loss, illuminating their roles in shaping the artistic rendition of our digital canvases.

- Explanation of Content Loss and Style Loss:

Content Loss: At its core, content loss quantifies the perceptual difference between the content of the input image and the content of the generated image. This loss function operates at a feature level, comparing the activations of intermediate layers in the neural network to assess how faithfully the generated image preserves the structural elements and semantic information of the original content.

Style Loss: By contrast, style loss captures the distinctive textures, colors, and recurring patterns that define the artistic style of a reference image. Unlike content loss, which compares feature maps position by position, style loss compares summary statistics: the correlations between the channels of a layer’s feature maps (its Gram matrix), computed at several layers of the network. Because these statistics are summed over all spatial positions, they describe which features occur together while discarding where they occur in the image.

- Implementing Content Loss:

To implement content loss, we leverage the feature representations of the input and generated images obtained from intermediate layers of the neural network. By comparing the feature activations using metrics such as mean squared error or cosine similarity, we quantify the discrepancy between the content of the input image and the generated image.

Specifically, content loss is computed by passing both the content image and the generated image through the network and extracting the feature maps from a selected layer, typically a deeper one (Gatys et al. used conv4_2 of VGG-19) that captures the arrangement of objects while tolerating changes in fine pixel-level detail. We then measure the difference between these feature activations; minimizing it pushes the generated image to reproduce the content of the input image.
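In code, the content loss for one layer is just a sum of squared differences between two feature tensors. The NumPy sketch below follows the ½·sum-of-squares form used by Gatys et al. In a real pipeline the feature maps would come from the network and the framework’s autograd would supply gradients; here they are plain arrays of shape `C×H×W`, and the helper names are illustrative.

```python
import numpy as np


def content_loss(generated_feats, content_feats):
    """Half the summed squared difference between two feature maps (C x H x W)."""
    diff = np.asarray(generated_feats, dtype=np.float64) - np.asarray(content_feats, dtype=np.float64)
    return 0.5 * float(np.sum(diff ** 2))


def content_loss_grad(generated_feats, content_feats):
    """Gradient of content_loss with respect to the generated feature map."""
    return np.asarray(generated_feats, dtype=np.float64) - np.asarray(content_feats, dtype=np.float64)
```

The gradient is simply the feature difference, so each update nudges the generated image’s features toward those of the content image.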

- Implementing Style Loss:

In contrast to content loss, which focuses on preserving the content of the input image, style loss aims to capture the essence of the artistic style embodied by the reference image. To achieve this, we employ techniques inspired by texture synthesis and statistical analysis to characterize the stylistic features of the reference image.

One common approach to implementing style loss is through the use of Gram matrices, which encode the correlations between feature maps within a given layer of the neural network. By comparing the Gram matrices of the style image and the generated image across multiple layers, we quantify the deviation from the desired style and adjust the pixels of the generated image accordingly; the network’s own weights stay fixed.
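A NumPy sketch of the Gram matrix and the per-layer style loss, using the `1/(4·N²·M²)` scaling from Gatys et al. (N is the number of channels, M the number of spatial positions) and equal weights across layers by default. It assumes the generated and style feature maps have the same shape, i.e. the two images were resized to the same dimensions; the function names are illustrative.

```python
import numpy as np


def gram_matrix(feats):
    """Gram matrix of one layer's feature maps (C x H x W): entry [i, j] is the
    inner product of channels i and j summed over all spatial positions."""
    c, h, w = feats.shape
    flat = feats.reshape(c, h * w)  # N_l x M_l
    return flat @ flat.T


def layer_style_loss(generated_feats, style_feats):
    """Squared Gram difference scaled by 1 / (4 N^2 M^2), as in Gatys et al."""
    if generated_feats.shape != style_feats.shape:
        raise ValueError("feature maps must have the same shape")
    c, h, w = generated_feats.shape
    n, m = c, h * w
    diff = gram_matrix(generated_feats) - gram_matrix(style_feats)
    return float(np.sum(diff ** 2)) / (4.0 * n ** 2 * m ** 2)


def style_loss(generated_layers, style_layers, weights=None):
    """Weighted sum of per-layer style losses (equal weights by default)."""
    if weights is None:
        weights = [1.0 / len(generated_layers)] * len(generated_layers)
    return sum(w * layer_style_loss(g, s)
               for w, g, s in zip(weights, generated_layers, style_layers))
```

Because the Gram matrix sums over positions, shuffling the spatial positions of a feature map leaves it unchanged. That is exactly why it captures texture and color statistics rather than layout.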

In summary, defining loss functions for artistic style transfer involves striking a delicate balance between preserving content fidelity and capturing stylistic nuances. By implementing content loss to preserve structural elements and style loss to capture artistic characteristics, we empower the neural network to create visually compelling compositions that seamlessly blend the content and style of the input images. Through the judicious application of these loss functions, we unlock the transformative power of deep learning in the realm of digital art, ushering in a new era of creativity and expression.
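Written out, the losses described in this section take the following form in Gatys et al. For a layer l, F^l is the generated image’s feature matrix (N_l channels by M_l spatial positions), P^l is the content image’s feature matrix at the content layer, and A^l is the Gram matrix of the style image’s features; w_l weights each style layer, and α and β set the content/style balance.

```latex
\begin{aligned}
\mathcal{L}_{\text{content}} &= \frac{1}{2}\sum_{i,j}\left(F^{l}_{ij} - P^{l}_{ij}\right)^{2} \\
G^{l}_{ij} &= \sum_{k} F^{l}_{ik}\,F^{l}_{jk} \\
E_{l} &= \frac{1}{4N_{l}^{2}M_{l}^{2}}\sum_{i,j}\left(G^{l}_{ij} - A^{l}_{ij}\right)^{2},
\qquad \mathcal{L}_{\text{style}} = \sum_{l} w_{l}\,E_{l} \\
\mathcal{L}_{\text{total}} &= \alpha\,\mathcal{L}_{\text{content}} + \beta\,\mathcal{L}_{\text{style}}
\end{aligned}
```

Raising β relative to α pushes the result toward the style image; lowering it preserves more of the content.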

Optimizing the Model

As we embark on the journey of artistic style transfer, the optimization process serves as our guiding compass, navigating the vast landscape of parameter space to converge upon visually captivating compositions. In this section, we delve into the intricate dance of setting up optimization parameters and executing the optimization process to minimize the total loss function—a pivotal step in realizing our artistic vision.

- Setting up Optimization Parameters:

At the outset, it’s crucial to fine-tune the optimization parameters to strike a delicate balance between exploration and exploitation, ensuring efficient convergence towards the desired artistic outcome. Key parameters include:

- Learning Rate: The learning rate governs the magnitude of parameter updates during optimization, influencing the speed and stability of convergence. A higher learning rate accelerates convergence but risks overshooting the optimal solution, while a lower learning rate fosters smoother optimization but may prolong the convergence process.

- Number of Iterations: The number of iterations dictates the duration of the optimization process, with each iteration refining the generated image towards the target style. Balancing computational resources and desired image quality, the number of iterations should be carefully chosen to achieve a satisfactory trade-off between convergence speed and output fidelity.

- Regularization Parameters: The relative weights of the content and style losses determine the balance between content preservation and style fidelity, while regularizers such as total variation loss, which penalizes differences between neighboring pixels, help suppress noise and artifacts and improve the coherence of the generated image. Tuning these weights is essential to producing visually pleasing results.
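Total variation regularization and the loss weighting can be sketched as follows. The TV term here is the common squared-difference variant (some implementations use absolute differences instead), and `weighted_total_loss` is a hypothetical helper that simply combines precomputed loss values. The weights α, β, and γ have no defaults because good values depend on image size, layer choice, and loss normalization.

```python
import numpy as np


def total_variation_loss(img):
    """Squared-difference total variation of a C x H x W image: penalizes
    differences between vertically and horizontally adjacent pixels."""
    dh = img[:, 1:, :] - img[:, :-1, :]  # vertical neighbours
    dw = img[:, :, 1:] - img[:, :, :-1]  # horizontal neighbours
    return float(np.sum(dh ** 2) + np.sum(dw ** 2))


def weighted_total_loss(content, style, tv=0.0, *, alpha, beta, gamma=0.0):
    """Combine precomputed loss values; alpha, beta, and gamma must be tuned."""
    return alpha * content + beta * style + gamma * tv
```

A flat image has zero total variation, while a noisy or checkerboard-like image scores high, which is why adding a small γ term smooths away speckle.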

- Running the Optimization Process:

With optimization parameters meticulously configured, we embark on the iterative journey of minimizing the total loss function—a composite metric encompassing both content and style losses. The optimization process unfolds as follows:

- Initialization: We initialize the generated image as a random noise pattern or a copy of the content image, setting the stage for subsequent transformations.

- Gradient Descent: Leveraging gradient-based optimization, we iteratively update the pixel values of the generated image to minimize the total loss function. At each iteration, gradients of the loss are computed with respect to the pixel values (via backpropagation through the frozen network), guiding the image toward a minimum of the loss. Gatys et al. used the L-BFGS optimizer; many implementations use Adam instead.

- Feedback Loop: Throughout the optimization process, we monitor the evolution of the total loss function and visualize the progress of the generated image. Fine-tuning optimization parameters based on empirical observations, we iteratively refine the generated image to better match the desired content and style.

- Convergence: As the optimization process unfolds, the generated image gradually converges towards a visually appealing composition that seamlessly blends the content and style of the input images. Convergence is typically assessed based on the stability of the total loss function and the perceptual quality of the generated image.
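The steps above can be sketched as a generic loop. This is plain gradient descent with a fixed learning rate; `loss_and_grad` is a hypothetical callback that, in a real pipeline, would compute the total loss and its gradient with respect to the pixels using a framework’s automatic differentiation. For initialization, pass either a copy of the content image or random noise of the same shape. The demo at the bottom substitutes a toy quadratic loss so that the loop can run on its own.

```python
import numpy as np


def optimize_image(init, loss_and_grad, lr=0.1, num_iters=500, tol=1e-6, log_every=100):
    """Gradient descent on the pixels of an image.

    loss_and_grad(x) must return (loss, gradient of loss w.r.t. x).
    Stops early once the change in loss falls below tol
    (scaled by the previous loss when that loss exceeds 1).
    """
    x = np.array(init, dtype=float)  # copy, so the initial image is untouched
    history = []
    for step in range(num_iters):
        loss, grad = loss_and_grad(x)
        history.append(loss)
        if step % log_every == 0:
            print(f"step {step:4d}  loss {loss:.6g}")  # feedback loop: monitor progress
        if step > 0 and abs(history[-2] - loss) <= tol * max(1.0, abs(history[-2])):
            break  # convergence: the loss has stopped improving meaningfully
        x -= lr * grad
    return x, history


if __name__ == "__main__":
    target = np.array([1.0, -2.0, 3.0])  # toy stand-in for a "perfect" image
    toy = lambda x: (0.5 * float(np.sum((x - target) ** 2)), x - target)
    result, history = optimize_image(np.zeros(3), toy)
    print(result, len(history))
```

Keeping the loss history makes it easy to plot convergence and to compare runs with different learning rates.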

By meticulously fine-tuning optimization parameters and executing the optimization process with precision and finesse, we unlock the transformative potential of deep learning in the realm of artistic expression. Through iterative refinement and creative exploration, we breathe life into digital canvases, ushering in a new era of visual storytelling and aesthetic innovation.

Generating Artistic Images

With the groundwork laid and the optimization process underway, we now turn our attention to the exhilarating process of generating artistic images: a symphony of creativity and computation that brings our vision to life. In this section, we explore the application of the pretrained model, experiment with diverse content and style combinations, and fine-tune parameters to achieve optimal results.

- Applying the Pretrained Model to Generate Artistic Images:

As the optimization process progresses, the generated image gradually takes on the stylistic characteristics of the reference artwork while preserving the structural elements of the content image. The network’s weights are not updated here: the pretrained convolutional network acts as a fixed judge of content and style, and it is the image itself that changes.

To apply the pretrained model, we pass the content and style images through the network once to record their target features, then run the optimization process to iteratively refine the generated image. With each iteration, the generated image evolves, gradually converging toward the desired artistic rendition that encapsulates the essence of both content and style.
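Putting the pieces together, here is a minimal PyTorch sketch of optimization-based style transfer in the spirit of Gatys et al. It assumes `features` is torchvision’s pretrained VGG-19 `.features` module, frozen and with its in-place ReLUs replaced by non-in-place ones, and that both images are normalized `1×3×H×W` tensors of the same size. Adam is swapped in for the L-BFGS optimizer used in the original paper because it needs no closure; `run_style_transfer` is a hypothetical name, and the default weights, learning rate, and step count are placeholders to tune.

```python
def run_style_transfer(features, content_img, style_img, *, content_idx=21,
                       style_idxs=(0, 5, 10, 19, 28), alpha=1.0, beta=1e4,
                       steps=300, lr=0.02):
    """Default indices are torchvision's conv4_2 (content) and conv1_1..conv5_1 (style)."""
    import torch  # imported lazily; only needed when the function runs

    wanted = set(style_idxs) | {content_idx}
    last = max(wanted)

    def collect(x):
        # Run x through the network, keeping activations at the wanted indices.
        out = {}
        for i, layer in enumerate(features):
            x = layer(x)
            if i in wanted:
                out[i] = x
            if i == last:
                break
        return out

    def gram(f):
        _, c, h, w = f.shape  # assumes batch size 1
        flat = f.reshape(c, h * w)
        return flat @ flat.t()

    with torch.no_grad():  # targets are constants, no gradients needed
        content_target = collect(content_img)[content_idx]
        style_feats = collect(style_img)
        style_targets = {i: gram(style_feats[i]) for i in style_idxs}

    generated = content_img.clone().requires_grad_(True)  # start from the content image
    optimizer = torch.optim.Adam([generated], lr=lr)

    for step in range(steps):
        optimizer.zero_grad()
        feats = collect(generated)
        c_loss = 0.5 * ((feats[content_idx] - content_target) ** 2).sum()
        s_loss = 0.0
        for i in style_idxs:
            _, c, h, w = feats[i].shape
            e_l = ((gram(feats[i]) - style_targets[i]) ** 2).sum() / (4 * c ** 2 * (h * w) ** 2)
            s_loss = s_loss + e_l / len(style_idxs)  # equal layer weights
        loss = alpha * c_loss + beta * s_loss
        loss.backward()  # gradients flow to the pixels of `generated` only
        optimizer.step()
        if step % 50 == 0:
            print(f"step {step}: total loss {loss.item():.4f}")
    return generated.detach()
```

To view the result, undo the normalization (multiply by the per-channel standard deviation, add the mean) and clamp to [0, 1] before saving.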

- Experimenting with Different Content and Style Combinations:

One of the hallmarks of artistic style transfer is its versatility and adaptability, allowing for endless permutations and combinations of content and style. By experimenting with diverse content and style images, we uncover a kaleidoscope of artistic possibilities, each offering a unique interpretation of the underlying visual elements.

Whether it’s juxtaposing the serene beauty of nature with the vibrant hues of abstract art or infusing architectural landscapes with the timeless elegance of classical paintings, the exploration of content and style combinations serves as a creative playground for artistic expression. Through experimentation and iteration, we discover new synergies and juxtapositions that breathe fresh life into our digital canvases.

- Fine-Tuning Parameters for Better Results:

As we traverse the landscape of artistic exploration, we continuously fine-tune optimization parameters and model configurations to achieve superior results. From adjusting the learning rate and number of iterations to fine-tuning regularization parameters and style weights, each parameter tweak refines the output quality and enhances the fidelity of the generated images.
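One simple way to organize this tuning is a small grid of settings whose outputs are saved under descriptive names for side-by-side visual comparison. The sketch below only generates the combinations; `parameter_grid` is a hypothetical helper, and the values shown are illustrative, not recommendations.

```python
from itertools import product


def parameter_grid(**choices):
    """Yield every combination of the given hyperparameter values as a dict."""
    keys = list(choices)
    for values in product(*(choices[k] for k in keys)):
        yield dict(zip(keys, values))


if __name__ == "__main__":
    # Illustrative values only; sensible ranges depend on your loss normalization.
    for cfg in parameter_grid(style_weight=[1e3, 1e4, 1e5], lr=[0.01, 0.05]):
        tag = "_".join(f"{k}{v:g}" for k, v in cfg.items())
        print(f"out_{tag}.png")  # run style transfer with cfg, save under this name
```

Loss values are not comparable across different style weights, so judge these runs by eye rather than by their final loss.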

Moreover, feedback from visual inspection and perceptual evaluation guides our parameter optimization efforts, enabling us to iteratively refine the generated images based on subjective preferences and artistic intuition. By embracing a cycle of experimentation, evaluation, and refinement, we elevate the quality of our artistic creations and push the boundaries of what’s possible with deep learning.

In conclusion, generating artistic images through style transfer is a dynamic and iterative process that fuses computational prowess with artistic vision. By applying the trained model, experimenting with diverse content and style combinations, and fine-tuning parameters for optimal results, we unlock the full potential of deep learning as a tool for creative expression and aesthetic innovation. Through the convergence of technology and artistry, we embark on a transformative journey into the realm of digital aesthetics, where pixels become brushstrokes and algorithms become artisans.

Evaluating and Refining Results

As we venture deeper into the realm of artistic style transfer, it becomes imperative to assess the quality of our generated images with a critical eye and refine our techniques to achieve optimal results. In this section, we explore methods for evaluating the quality of generated images through visual inspection and quantitative metrics, as well as techniques for refining our approach through parameter adjustments and model modifications.

- Assessing the Quality of Generated Images:

Visual Inspection: The first step in evaluating the quality of generated images involves a thorough visual inspection. By scrutinizing the generated images alongside the original content and style images, we can assess how effectively the neural network has captured the desired artistic style while preserving the content of the input image. Paying close attention to details such as texture fidelity, color coherence, and overall composition allows us to identify areas for improvement and refine our approach accordingly.

Quantitative Metrics: In addition to visual inspection, quantitative metrics can provide more objective measures. Because style transfer has no single correct output, there is no ground-truth image to compare against; instead, metrics are computed against the inputs or across sets of outputs. The Structural Similarity Index (SSIM), computed between the generated image and the content image, indicates how well structure has been preserved. Fréchet Inception Distance (FID) compares the distribution of Inception-network features over a set of generated images with that of a reference set, such as a collection of artworks in the target style. The style loss itself can also serve as a rough measure of how closely the output matches the style image. These metrics complement, rather than replace, subjective visual assessment.
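To show what SSIM actually measures, the NumPy sketch below computes it over the whole image as a single window, with the usual constants `C1 = (0.01·L)²` and `C2 = (0.03·L)²` for data range `L`. This is a simplification: standard SSIM averages the same statistic over local Gaussian windows, which is what `skimage.metrics.structural_similarity` in scikit-image computes. Apply it to grayscale images or per channel; `global_ssim` is an illustrative name.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (simplified; not windowed SSIM)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    # Luminance/contrast/structure comparison combined into one ratio.
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

Comparing the generated image with the content image this way gives a value of 1 for identical images and lower values as structure diverges; strong stylization will naturally lower it, so it is best read alongside the style loss.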

- Techniques for Improving Results:

Adjusting Hyperparameters: Fine-tuning hyperparameters such as learning rate, regularization parameters, and style weights can significantly impact the quality of generated images. By iteratively adjusting these parameters based on feedback from visual inspection and quantitative metrics, we can optimize the convergence behavior of the optimization process and enhance the fidelity of the generated images. Experimentation with different hyperparameter configurations enables us to uncover optimal settings that yield superior results.

Using Different Model Architectures: While architectures like VGG-19 are commonly used for style transfer, experimenting with alternative architectures can offer fresh perspectives and potentially improve results. Architectures such as ResNet, DenseNet, or even custom-designed networks may exhibit different capabilities in capturing content and style features, leading to diverse artistic outcomes. By exploring a diverse range of model architectures and assessing their performance through visual inspection and quantitative metrics, we can expand our toolkit and refine our approach to style transfer.

In conclusion, evaluating and refining the results of artistic style transfer is a multifaceted process that combines subjective assessment with objective metrics and iterative experimentation. By critically evaluating the quality of generated images through visual inspection and quantitative metrics and refining our techniques through parameter adjustments and model modifications, we can continuously push the boundaries of artistic expression and achieve stunning visual compositions that captivate and inspire. Through a commitment to excellence and a spirit of innovation, we unlock the full potential of deep learning as a transformative tool for digital artistry.

Conclusion

In this comprehensive tutorial, we’ve embarked on a transformative journey into the realm of artistic style transfer, exploring the intersection of technology and creativity through the lens of deep learning. As we conclude our exploration, let’s recap the key concepts learned and embrace the boundless possibilities that lie ahead.

Recap of Key Concepts: Throughout this tutorial, we’ve delved into the intricacies of artistic style transfer, uncovering the underlying principles and techniques that empower us to seamlessly blend the content and style of images. From understanding the concept of style transfer and the role of neural networks to setting up our environment, preparing data, and optimizing the model, we’ve traversed a comprehensive roadmap that equips us with the knowledge and skills to embark on our artistic journey.

Encouragement to Continue Experimenting: As we stand on the threshold of creative discovery, let us embrace the spirit of experimentation and exploration. With each stroke of the digital brush and each iteration of the optimization process, we have the opportunity to push the boundaries of artistic expression and redefine the possibilities of deep learning for visual storytelling. Let curiosity be our compass and innovation be our guiding star as we continue to explore the vast landscape of artistic style transfer.

To our fellow creators and innovators, we encourage you to share your creations, experiences, and insights with the world. Whether you’re a seasoned artist pushing the boundaries of digital art or a curious enthusiast embarking on your first style transfer experiment, your contributions enrich the collective tapestry of creativity and inspire others to embark on their own artistic journeys. Let us come together as a community to celebrate the beauty of human expression and the transformative power of technology.

In closing, let us carry forth the lessons learned and the inspiration gained from this tutorial as we venture into the ever-expanding frontier of artistic style transfer. With passion, perseverance, and a commitment to excellence, let us continue to push the boundaries of what’s possible, creating breathtaking artworks that captivate the imagination and inspire the world. The canvas awaits, and the journey is ours to embrace.

Additional Resources

As we conclude our journey into the realm of artistic style transfer, let us not forget that learning is a continuous process. To further enrich your understanding and expand your skill set in the domain of deep learning and computer vision, here are some valuable additional resources to explore:

- Research Papers:

- “A Neural Algorithm of Artistic Style” by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge: This seminal paper introduced the neural style transfer algorithm, laying the foundation for subsequent research in the field.

- “Perceptual Losses for Real-Time Style Transfer and Super-Resolution” by Justin Johnson, Alexandre Alahi, and Li Fei-Fei: This paper trains feed-forward networks with perceptual loss functions, replacing per-image optimization with a single forward pass and enabling real-time style transfer.

- Tutorials and Online Resources:

- TensorFlow Tutorials: The official TensorFlow website offers a wealth of tutorials and documentation on deep learning, including guides on implementing style transfer and other computer vision tasks.

- PyTorch Tutorials: The PyTorch website provides comprehensive tutorials and documentation for deep learning enthusiasts, covering a wide range of topics, including style transfer and neural network implementation.

- OpenAI: OpenAI’s research and blog posts often delve into cutting-edge techniques in deep learning and artificial intelligence, offering valuable insights and inspiration for practitioners.

- Books and Courses:

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: This comprehensive textbook provides an in-depth introduction to deep learning theory and techniques, making it an essential resource for aspiring practitioners.

- “Computer Vision: Algorithms and Applications” by Richard Szeliski: This book offers a comprehensive overview of computer vision principles and algorithms, covering topics such as image processing, feature detection, and object recognition.

- Coursera: Platforms like Coursera offer a plethora of courses on deep learning, computer vision, and related topics, taught by leading experts in the field. Courses such as “Deep Learning Specialization” by Andrew Ng provide hands-on experience and practical insights into deep learning techniques.

By exploring these additional resources, you’ll gain deeper insights into the theoretical foundations and practical applications of deep learning and computer vision. Whether you’re a novice seeking to expand your knowledge or an experienced practitioner looking to stay abreast of the latest developments, these resources offer invaluable opportunities for growth and learning. Happy exploring!